Blog entry from June 2022

Conference from - @ Grundlsee

https://www.ashpc.at/ - Indico (username: ashpc22-user, password: conferenceuser) - Abstracts - PDF

Attendees

Update from VSC

- The next-generation Vienna Scientific Cluster (VSC5) will be fully available in summer 2022; 20 GPU nodes are still missing.

- 710 CPU-Nodes

  - 570 Nodes (512 GB)

  - 120 Nodes (1 TB)

  - 20 Nodes (2 TB)

- 60 GPU-Nodes

  - 512 GB

  - 2x NVIDIA A100

- AMD Epyc 7713 (4 NUMA, Cores: 64, Threads: 128, BaseClock 2.0 GHz, L3 Cache: 256 MB)

- Intel compilers are still a good option for Fortran.

- Currently #301 on the Top500 list (95,232 cores, 3.05 PFlop/s, 516 kW); for comparison, VSC4 was #82 in 2019.

- We requested more storage for IMGW: 100 TB in DATA.

- Additional storage will incur fees.

- New JupyterHub + NoMachine (GUI, 4 Workstations)

- VSC provides support for rewriting and optimizing applications.

- Future plan: connect the JET storage system to VSC.
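As a rough cross-check of the hardware figures listed above, the following minimal sketch adds up the CPU node partitions and derives an energy-efficiency figure from the quoted Top500 numbers. The variable names are made up for illustration, and two assumptions go beyond the notes: 1 TB is treated as 1024 GB, and efficiency is taken simply as the quoted performance divided by the quoted power.

```python
# Node counts and memory per node (GB) as listed in the VSC5 notes above.
# Assumption: 1 TB = 1024 GB (binary units); the notes do not say which is meant.
cpu_partitions = [
    (570, 512),
    (120, 1024),
    (20, 2048),
]

total_cpu_nodes = sum(count for count, _ in cpu_partitions)
total_memory_gb = sum(count * mem for count, mem in cpu_partitions)

# Top500 figures quoted in the notes.
performance_pflops = 3.05
power_kw = 516

# PFlop/s -> GFlop/s is a factor of 1e6; kW -> W is a factor of 1e3.
# Assumption: efficiency is simply quoted performance / quoted power.
gflops_per_watt = (performance_pflops * 1e6) / (power_kw * 1e3)

print(f"CPU nodes: {total_cpu_nodes}")
print(f"CPU memory: {total_memory_gb} GB ({total_memory_gb / 1024:.0f} TB)")
print(f"Efficiency: {gflops_per_watt:.2f} GFlop/s per W")
```

The three partitions sum to the 710 CPU nodes stated in the notes. Under the assumptions above, the CPU partition holds roughly 445 TB of memory and the system delivers about 5.9 GFlop/s per watt.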

EuroHPC

talk by Vangelis Floros from EuroHPC JU

EuroHPC: a legal and funding entity created in 2018 with headquarters in Luxembourg. It bundles HPC activities in Europe and follows on from PRACE.

For more information see: PDF

NCC - Austria

talk by Sarah Stryeck

A National Competence Center for High-Performance Computing, High-Performance Data Analytics and Artificial Intelligence.

Want to use EuroHPC resources? They advise and help with proposals!

Contact: info@eurocc-austria.at / https://eurocc-austria.at/

Note: Support for software development can be provided directly by the EuroHPC centers (there are some showcases).

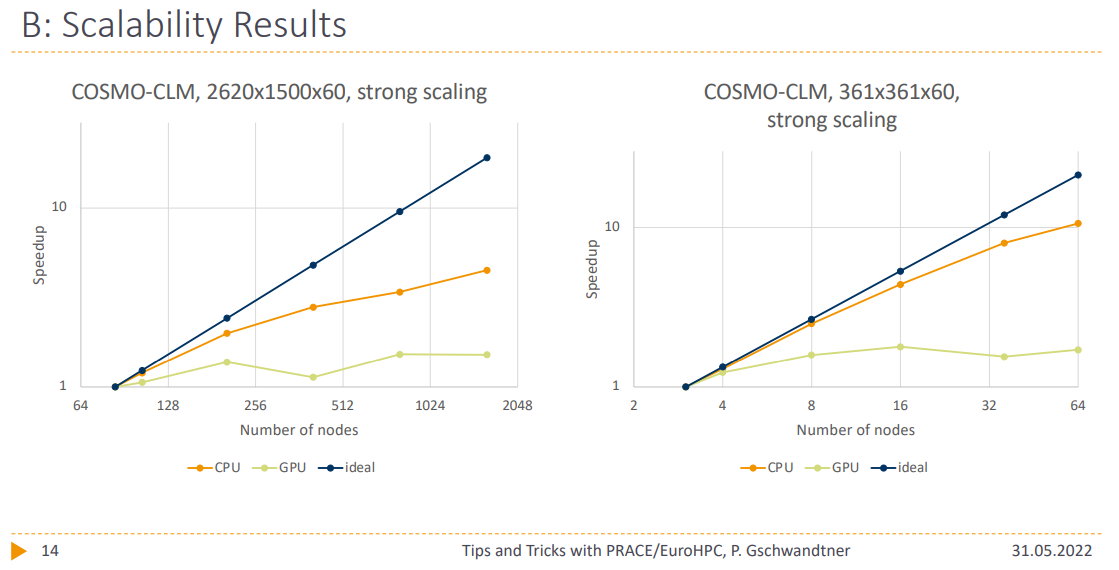

Tips and tricks from the user perspective with PRACE/EuroHPC applications

talk by Philipp Gschwandtner from University of Innsbruck

Motivation for proposals:

- PRACE/EuroHPC proposals take considerable effort but have a high pay-off

- not as difficult as one might think → PRACE acceptance rates are quite high

- Ingredients for a successful PRACE/EuroHPC access proposal

  - Scientific problem + solution

  - Fast and scalable implementation

  - A pinch of luck

  - Let it simmer for a few weeks

  - Enjoy!

- We can ask him for support and advice too

Contact: philipp.gschwandtner@uibk.ac.at

One awarded project was kmMountains: a structured grid simulation of climate change in mountainous landscapes (Alps, Himalaya)

For more information see: PDF (Talk by Emily Collier from 2021, PDF)

LEONARDO: current status and what it is to us

talk by Alessandro Marani from CINECA

Specifications & Current Status:

Next steps: hardware installation in 07/2022; configuration and testing to be completed by 12/2022

Total investment: €240 million, EU contribution: €120 million

Note: housed in the same building as the Atos BullSequana (ECMWF's HPC system)

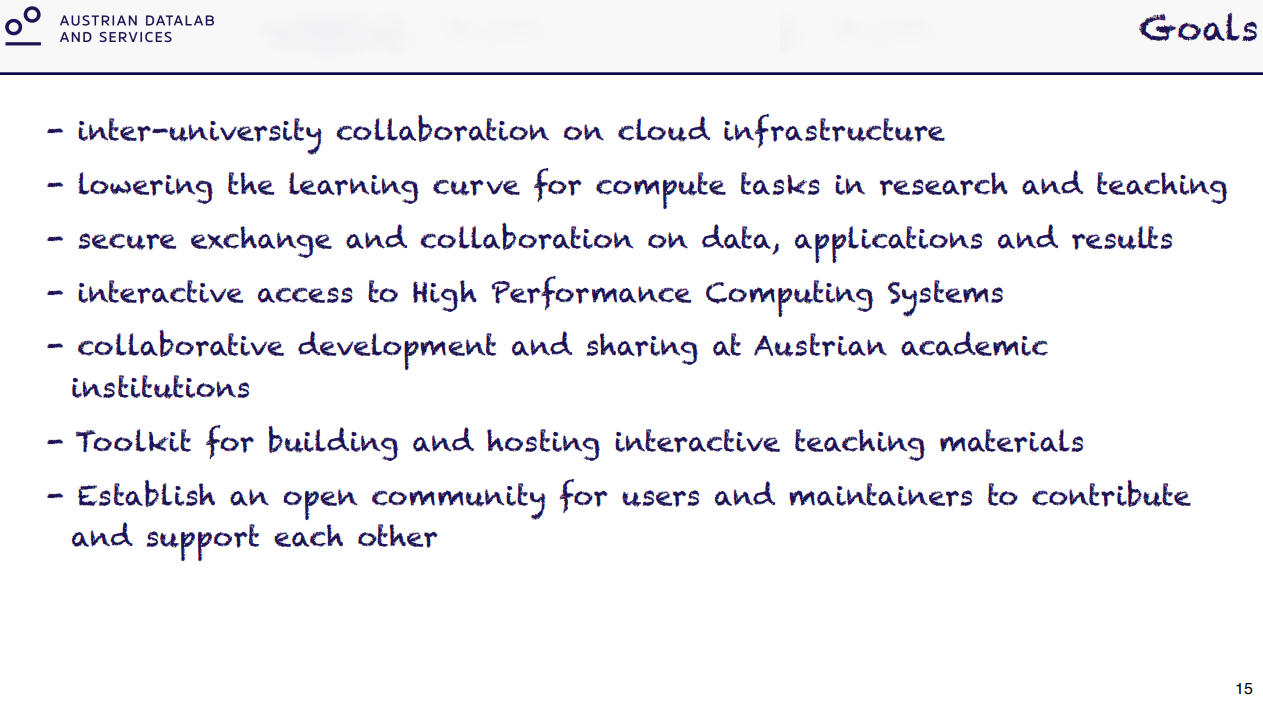

Austrian DataLAB and Services

talk by Peter Kandolf from University of Innsbruck/TU Vienna

A DataLab for all Austrian universities; it covers research, teaching, and the universities' central IT services (ZIDs); funded by BMBWF and all participating universities (2020-2024)

Further information: ADLS (austrianopensciencecloud.org)

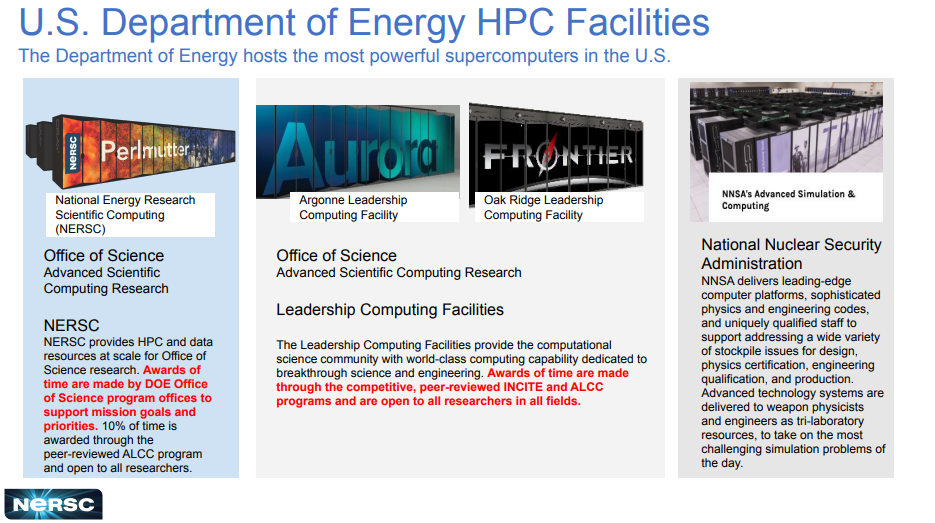

Perlmutter and HPC in the U.S. Department of Energy Office of Science

talk by Richard Gerber from NERSC

Note: massive support for software development on GPUs (hackathons, companies, scientists, ...)

Total investment: $600 million (Perlmutter alone)

For more information see: PDF

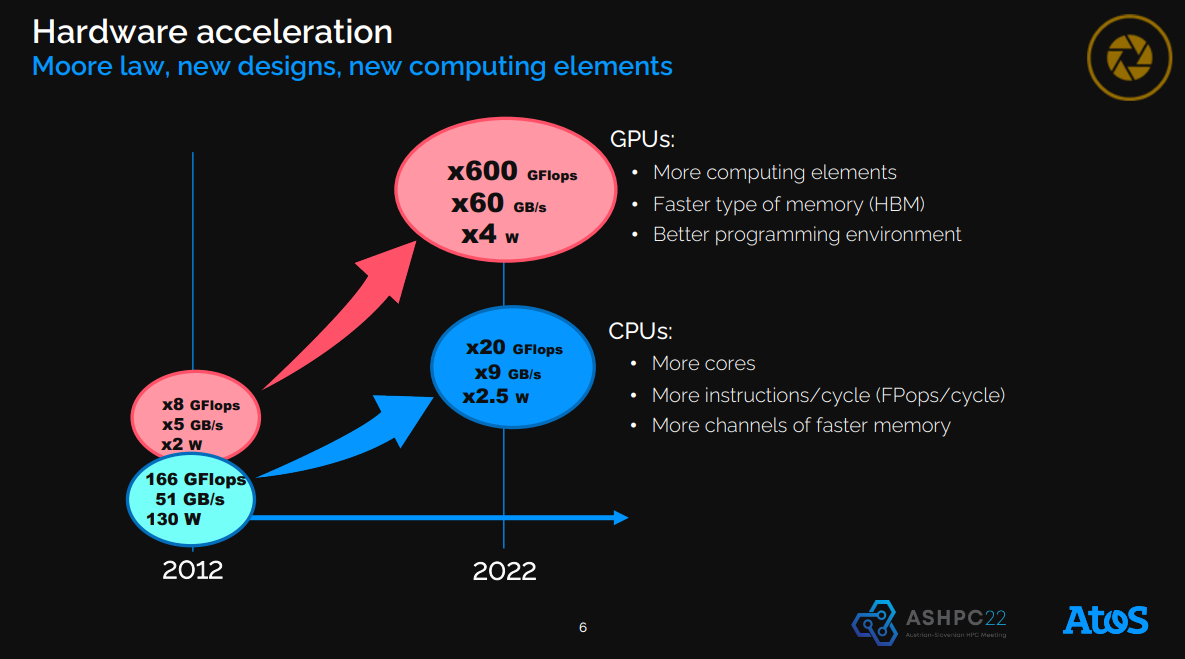

HPC in the Exascale era and beyond

talk by Jean-Pierre Panziera from ATOS

Comparison of GPUs/CPUs & Usage of AI for NWP (ECMWF)

For more information see: PDF. About ECMWF's new data center in Bologna: ECMWF Newsletter 22

Next ASHPC

The next ASHPC conference is expected to take place in June 2023 in Maribor, Slovenia.

Please save the date for ASHPC23: June 13-15, 2023!