Blog

In addition to the well-known climate lab, which once again showed what is behind climate change and what it all has to do with physics, this year there was also a contribution from geophysics that explored the interior of the Earth in more detail. Our team's vivid explanations quickly awakened the children's interest and prompted some exciting questions. The children especially enjoyed the various experiments, such as the sea-ice melting experiment or triggering a mini-earthquake. In cooperation with institutes of our faculty, we organized a puzzle rally through the UZA2 and showed the children the diversity of our faculty. As every year, we thank all participants and supporters and look forward to seeing you again!

On behalf of the Institute of Meteorology and Geophysics, we wish you another great summer!

It was really, really hot on Monday when we started the Kinderuni, so we had no choice but to do the experiments inside. Nobody wanted to be outside in the sun! Clearly, there is not enough shade around our building.

Impressions


Thanks for jumping with me!!!

Dear Interested,

Please find some IT updates below. Report any issues or questions to it.img-wien@univie.ac.at

Upcoming Events

- ☁️☁️☁️ wolke.img.univie.ac.at replaces srvx1.img.univie.ac.at as landing page 🥳

  - Links on srvx1.img.univie.ac.at/??? are gone by

- Upcoming maintenance in summer:

  - Webserver switch Wolke - SRVX1

  - Network speed upgrade from 10GbE to 25GbE

  - JET cluster upgrade

- SRVX8 gets a GPU; in summer it will become the new student hub server

- New server Aurora: aurora.img.univie.ac.at

- Quotas on SRVX1

- New user restrictions on JET

- ECMWF has very useful new open data access/charts

- WLAN vouchers (eduroam for guests), request via ServiceDesk

- GitLab has more runners for CI.

New Landing page

Please use wolke.img.univie.ac.at from now on. Requests are redirected to the servers actually running these services, hidden from the user. This allows for maximum flexibility to move services from server to server.

| new address / subdomain | old address | service | comment | start |
|---|---|---|---|---|
| jupyter.wolke.img.univie.ac.at | jet01.img.univie.ac.at | Research Jupyter Hub on JET | | |
| teaching.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/hub | Teaching Jupyter Hub on SRVX1 | migrates to SRVX8 | |
| webdata.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/webdata | Web file browser on SRVX1 | upgrade to new version on | OPERATIONAL |
| secure.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/secure | Message encryption on SRVX1 | migrates to DEV | |
| filetransfer.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/filetransfer | Command-line file transfer | | |
| uptime.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/status | Status of IT services | migrates to DEV | OPERATIONAL |
| ecaccess.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/ecmwf | ECAccess local gateway | upgrade to containerized version | |
| library.wolke.img.univie.ac.at | srvx1.img.univie.ac.at/library | iLibrarian digital library | upgrade to new version | |
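If you have old addresses in bookmarks or scripts, a small lookup built from the table can tell you where each service now lives. This is a minimal sketch: `new_address` and `OLD_TO_NEW` are hypothetical names, and the helper returns only the new subdomain; it assumes nothing about how paths below an old address map onto the new service.

```python
from typing import Optional
from urllib.parse import urlsplit

# Old address -> new wolke subdomain, copied from the table above.
OLD_TO_NEW = {
    "jet01.img.univie.ac.at": "jupyter.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/hub": "teaching.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/webdata": "webdata.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/secure": "secure.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/filetransfer": "filetransfer.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/status": "uptime.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/ecmwf": "ecaccess.wolke.img.univie.ac.at",
    "srvx1.img.univie.ac.at/library": "library.wolke.img.univie.ac.at",
}


def new_address(old: str) -> Optional[str]:
    """Return the new subdomain for an old address, or None if unknown."""
    # Accept both bare "host/path" strings and full URLs with a scheme.
    parts = urlsplit(old if "://" in old else "https://" + old)
    key = (parts.netloc + parts.path).rstrip("/")
    # Check longer prefixes first so the most specific entry wins.
    for prefix in sorted(OLD_TO_NEW, key=len, reverse=True):
        if key == prefix or key.startswith(prefix + "/"):
            return OLD_TO_NEW[prefix]
    return None


if __name__ == "__main__":
    print(new_address("https://srvx1.img.univie.ac.at/webdata/"))
```

Matching on whole path segments (`prefix + "/"`) keeps, for example, a hypothetical `/hubble` path from being mistaken for `/hub`.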

Jet Cluster 🖧

Please remember that JET01/02 are not meant for computing; use SRVX1/SRVX8/Aurora for interactive computing.

JET01/JET02 now have stricter limits for user processes (max. 20 GB, max. 500 processes). In autumn, when the JET upgrade will most likely happen, the two login nodes will become fully available to users, with no restrictions anymore.
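To see how close you are to these limits, you can count your own processes and add up their resident memory. This is a minimal sketch that reads `/proc` and therefore only works on Linux; the function names are hypothetical, and it assumes (our interpretation) that the 20 GB limit refers to the total memory of all your processes. Summing resident memory over-counts pages shared between processes, so treat the total as an upper estimate.

```python
import os

# Limits quoted above for JET01/JET02. We assume the 20 GB refers to the
# total memory of all of a user's processes (hypothetical interpretation).
MAX_PROCS = 500
MAX_MEM_GB = 20.0


def own_process_rss():
    """Resident memory in bytes of every process owned by the current user (Linux only)."""
    uid = os.getuid()
    page_size = os.sysconf("SC_PAGE_SIZE")
    sizes = []
    for entry in os.listdir("/proc"):
        if not entry.isdigit():
            continue  # skip non-process entries such as /proc/meminfo
        try:
            if os.stat(f"/proc/{entry}").st_uid != uid:
                continue
            with open(f"/proc/{entry}/statm") as fh:
                resident_pages = int(fh.read().split()[1])  # 2nd field: resident pages
        except (FileNotFoundError, ProcessLookupError, PermissionError):
            continue  # the process exited meanwhile or is not readable
        sizes.append(resident_pages * page_size)
    return sizes


def check_limits(rss_bytes, max_procs=MAX_PROCS, max_mem_gb=MAX_MEM_GB):
    """Return (process count, total GB, list of warning strings)."""
    count = len(rss_bytes)
    total_gb = sum(rss_bytes) / 1024**3  # upper estimate: shared pages counted twice
    warnings = []
    if count > max_procs:
        warnings.append(f"{count} processes exceed the limit of {max_procs}")
    if total_gb > max_mem_gb:
        warnings.append(f"{total_gb:.1f} GB exceeds the limit of {max_mem_gb} GB")
    return count, total_gb, warnings


if __name__ == "__main__":
    count, total_gb, warnings = check_limits(own_process_rss())
    print(f"{count} processes, {total_gb:.2f} GB resident")
    for warning in warnings:
        print("WARNING:", warning)
```

Keeping the limit check separate from reading `/proc` makes it easy to reuse with numbers from other tools such as `ps`.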

Software 📝

gnu-stack - Combination of multiple packages (cdo, eccodes, nco, ...) built with one compiler, GCC 8.5.0

intel-stack - Combination of multiple packages (cdo, eccodes, nco, ...) built with one compiler, intel-oneapi 2021.7.1

mayavi 4.8.1 - 4D scientific plotting

rttov 12.2 - RTTOV library + Python interface, built with GCC 8.5.0

SRVX🖳

Will need to be renamed after summer.

Software 📝

dwd-opendata 0.2.0 - Download DWD ICON open data, forecasts, analyses

nwp 2023.1 - NWP Python distribution (enstools, ...)

gnu-stack - Combination of multiple packages (cdo, eccodes, nco, ...) built with one compiler, GCC 8.5.0

intel-stack - Combination of multiple packages (cdo, eccodes, nco, ...) built with one compiler, intel-oneapi 2021.7.1

cuda 11.8.0 - NVIDIA CUDA library and utils

Hardware 🧰

An NVIDIA GeForce GTX 1660 GPU (6 GB memory) has been installed in SRVX8 to allow for graphical computing using, e.g., ParaView or Mayavi.

You can monitor the execution on the GPU using: nvidia-smi -l

Example of running a job on the GPU vs. on the CPU, using the Python script gpu-stress.py:

```console
# load a conda module, e.g. micromamba
you@srvx8 $ module load micromamba
# set up an environment and install the required packages
you@srvx8 $ micromamba create -p ./env/gputest -c conda-forge numba cudatoolkit
# run the example on CPU and GPU
you@srvx8 $ ./env/gputest/bin/python3 gpu-stress.py
---------------
Add 1 to a array[1000] on CPU: 0.0002308s on GPU: 0.1826s
Add 1 to a array[100000] on CPU: 0.02366s on GPU: 6.91e-05s
Add 1 to a array[1000000] on CPU: 0.2343s on GPU: 0.0008399s
Add 1 to a array[10000000] on CPU: 2.299s on GPU: 0.009334s
Add 1 to a array[100000000] on CPU: 23.14s on GPU: 0.09309s
Add 1 to a array[1000000000] on CPU: 229.9s on GPU: 0.9586s
---------------
```

Obviously this is just an example, but it demonstrates how parallel computing might help with some of your problems. Please let me know if you have other examples or need help implementing code changes.
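The contents of gpu-stress.py are not shown in this post. Below is a minimal sketch of what such a script could look like, assuming Numba's CUDA support from the `numba` and `cudatoolkit` packages installed above; the function names are hypothetical and the output only imitates the format of the run shown. It falls back to a CPU-only run when Numba or a GPU is not available.

```python
import time

try:  # Numba (and NumPy, which Numba requires) are optional here
    import numpy as np
    from numba import cuda
except ImportError:
    np = None
    cuda = None


def add_one_cpu(values):
    """Add 1 to every element with a plain Python loop (serial, one CPU core)."""
    for i in range(len(values)):
        values[i] += 1
    return values


if cuda is not None:
    @cuda.jit
    def add_one_kernel(arr):
        i = cuda.grid(1)  # global index of this GPU thread
        if i < arr.shape[0]:  # threads past the end of the array do nothing
            arr[i] += 1


def gpu_available():
    """True if Numba is installed and can see a CUDA device."""
    return cuda is not None and cuda.is_available()


def add_one_gpu(host_array):
    """Add 1 to every element, one CUDA thread per element."""
    device_array = cuda.to_device(host_array)  # copy host -> GPU
    threads_per_block = 256
    blocks = (host_array.size + threads_per_block - 1) // threads_per_block
    add_one_kernel[blocks, threads_per_block](device_array)
    return device_array.copy_to_host()  # copy GPU -> host


def benchmark(sizes):
    use_gpu = gpu_available()
    print("---------------")
    for n in sizes:
        values = [0.0] * n
        start = time.perf_counter()
        add_one_cpu(values)
        line = f"Add 1 to array[{n}] on CPU: {time.perf_counter() - start:.4g}s"
        if use_gpu:
            host_array = np.zeros(n)
            start = time.perf_counter()
            add_one_gpu(host_array)
            line += f" on GPU: {time.perf_counter() - start:.4g}s"
        print(line)
    print("---------------")


if __name__ == "__main__":
    benchmark([10**k for k in range(3, 8)])
```

In this sketch the GPU timing includes the copies between host and GPU and, on the first call, the just-in-time compilation of the kernel. Such one-time overheads are probably why the smallest array is slower on the GPU in the output above, while large arrays profit from thousands of threads working in parallel.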

Quotas🧮

Please remember that strict quotas need to be enforced so that the backup procedure is much more responsive than it used to be.

As described in Computing (HPC, Servers) there are the following storage space restrictions:

| Name | HOME | SCRATCH | Quota limits enforced? | Comment |
|---|---|---|---|---|
| SRVX1 / SRVX8 | 100 GB | 1 TB | 01.09.2023 | Staff; exceptions can be granted. |
| SRVX1 | 50 GB | - | 01.09.2023 | Students |
| JET | 100 GB | - | YES | |
| VSC | 100 GB | 100 TB | YES | Shared between all users; the number of files matters too (max. 2e6 files) |

Note: Students will only be given 50 GB on HOME and no SCRATCH.

For Teaching on SRVX1 use: /mnt/students/lehre (request a directory for your course to share with students)
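To check how much of your quota you are using, you can add up file sizes and count files below a directory, similar to `du` plus a file count. This is a minimal sketch with hypothetical helper names; it assumes 1 GB = 1024³ bytes and reports apparent file sizes, which can differ from the disk blocks the quota system actually counts.

```python
import os


def usage(path):
    """Return (total bytes, number of files) below `path`.

    Sizes are apparent file sizes (st_size), which can differ from the
    disk blocks a quota system actually counts.
    """
    total_bytes = 0
    n_files = 0
    for root, _dirs, files in os.walk(path):  # does not follow symlinked dirs
        for name in files:
            try:
                info = os.lstat(os.path.join(root, name))  # do not follow symlinks
            except OSError:
                continue  # file vanished or is unreadable
            total_bytes += info.st_size
            n_files += 1
    return total_bytes, n_files


def report(path, quota_gb, max_files=None):
    """Summarize usage of `path` against a quota in GB (assumed 1 GB = 1024**3 bytes)."""
    total_bytes, n_files = usage(path)
    used_gb = total_bytes / 1024**3
    text = f"{path}: {used_gb:.1f} of {quota_gb} GB ({100 * used_gb / quota_gb:.0f}%)"
    if max_files is not None:
        text += f", {n_files} of {max_files} files"
    return text


if __name__ == "__main__":
    # e.g. staff HOME on SRVX1/SRVX8 (100 GB); use 50 for student accounts
    print(report(os.path.expanduser("~"), 100))
```

For VSC, passing `max_files=2_000_000` also shows the file count against the limit in the table.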

VSC🖧

There has been a major software stack change on VSC: the software stacks are now separated by architecture, i.e. there is a different stack for VSC4 and VSC5.

Please check that your paths are correct, as module names have changed once again. More information is available on the VSC Wiki.

Software 📝

eccodes 2.25 - with Fortran extension on VSC5

Please have a look at our new VSC project page and add your project / usage statistics. Thanks.

There are still problems with some VSC modules not setting CPATH and LIBRARY_PATH correctly.
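Until this is fixed, a common workaround is to add a module's `include` and `lib` directories to these variables yourself, since GCC reads CPATH for header search and LIBRARY_PATH for link-time library search. This is a minimal sketch: `missing_exports` and the script name `fix_paths.py` are hypothetical, and the installation prefix is a placeholder you would look up, e.g. with `module show <name>`.

```python
import os
import sys


def missing_exports(prefix, env=None):
    """Return shell `export` lines adding <prefix>/include to CPATH and
    <prefix>/lib to LIBRARY_PATH when they are not listed yet."""
    env = os.environ if env is None else env
    wanted = {
        "CPATH": os.path.join(prefix, "include"),
        "LIBRARY_PATH": os.path.join(prefix, "lib"),  # some installs use lib64
    }
    lines = []
    for var, directory in wanted.items():
        if directory not in env.get(var, "").split(os.pathsep):
            # ${VAR:+:$VAR} appends the old value only if VAR was already set
            lines.append(f'export {var}="{directory}${{{var}:+:${var}}}"')
    return lines


if __name__ == "__main__":
    # usage: python3 fix_paths.py /path/to/module/prefix
    for line in missing_exports(sys.argv[1]):
        print(line)
```

Paste the printed lines into your shell or job script after loading the module. Check beforehand whether the installation uses `lib64` instead of `lib`.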

VPN

As announced by the ZID, MFA (multi-factor authentication) will be mandatory for the VPN from 3 July onwards! More information is available on our Wiki (MFA - Multifactor Authentication). There are two options: using a YubiKey or using an authenticator app.

MS365 vs. Office 2021

You now have the opportunity to choose between the cloud version MS365 (known as "MS office suite") and MS Office 2021 (local "on premise" installation).

In principle, it is up to each user which version to choose. More information can also be found in our wiki.

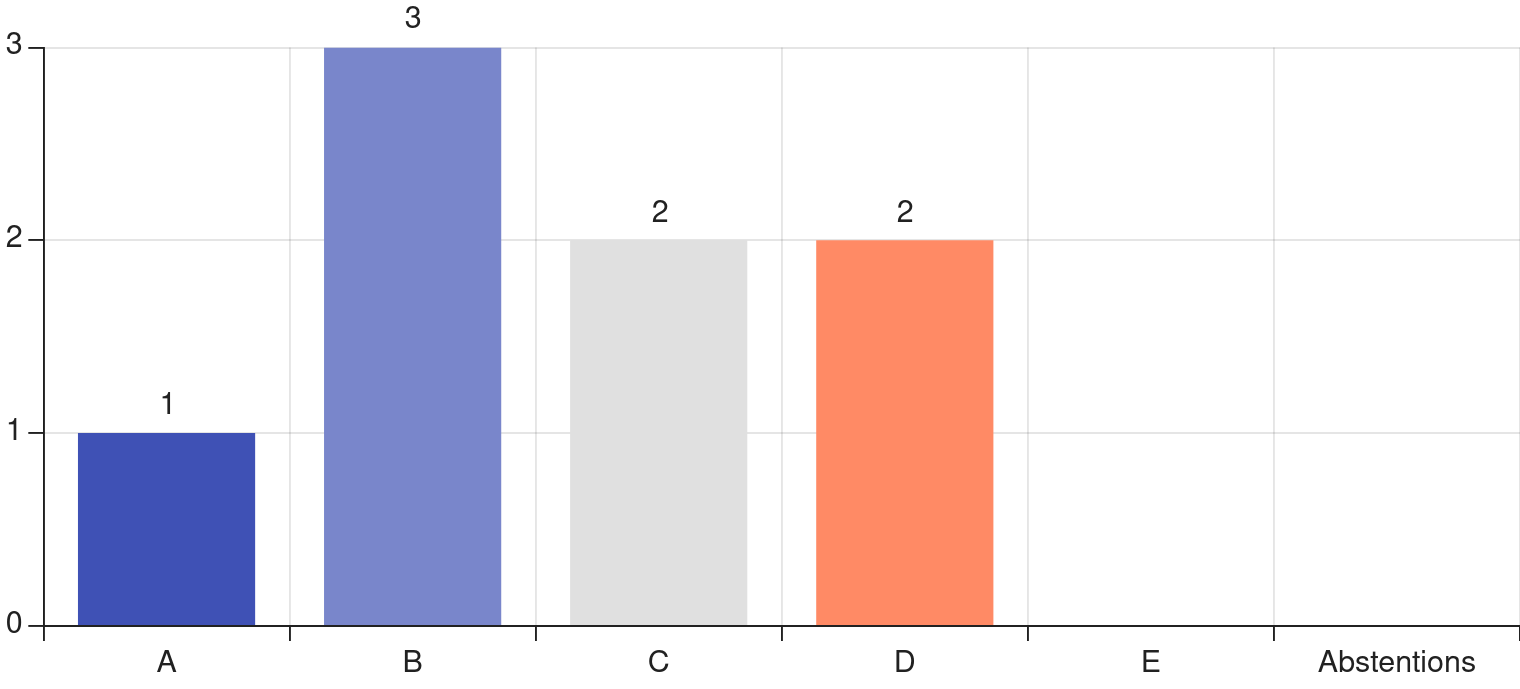

On Tuesday, January 24, 2023, about 10 people from IMG met with colloquium speaker Cyril Brunner to discuss our views on carbon compensation. A couple of survey questions started off the discussion, led by Lukas Brunner. As it turned out, about half of the participants had personally used a compensation scheme before to make up for CO2 emissions. Overall, the attitude towards compensation in the room was somewhat mixed, as the survey results below show:

Compensating emissions is a good thing overall.

A Strongly agree

B Somewhat agree

C Neither agree nor disagree

D Somewhat disagree

E Strongly disagree

Further discussion revealed that most participants see the option to remove CO2 as an integral part of achieving carbon neutrality and as positive in principle. However, several open issues in the practical implementation led to this mixed result. One prominent recent example of these real-world problems is a scandal revealed by a network of journalists (e.g., https://www.theguardian.com/environment/2023/jan/18/revealed-forest-carbon-offsets-biggest-provider-worthless-verra-aoe). Another discussion centered on choosing the most cost-efficient option versus reliable or trustworthy options. Finally, Cyril also laid out some of the differences between compensating emissions by avoiding them elsewhere (which can never truly lead to net zero) and active carbon removal, for example by direct air capture.

Another topic of discussion was who should be responsible for emissions in a work-related context:

If I have to fly for work my employer should compensate my emissions.

A Strongly agree

B Somewhat agree

C Neither agree nor disagree

D Somewhat disagree

E Strongly disagree

One argument was that for many of our trips the decision whether to go lies largely with ourselves, which could mean that we bear a certain personal responsibility. Overall, however, we agreed that ultimately the employer should pay for compensation. The University of Vienna is currently working on a sustainability roadmap (https://nachhaltigkeit.univie.ac.at/en/sustainability-strategy/roadmap-for-climate-neutrality/), but at least in communicating its actions it may need to improve:

The University of Vienna already compensates emissions from air travel.

A Yes

B No

C I don't know

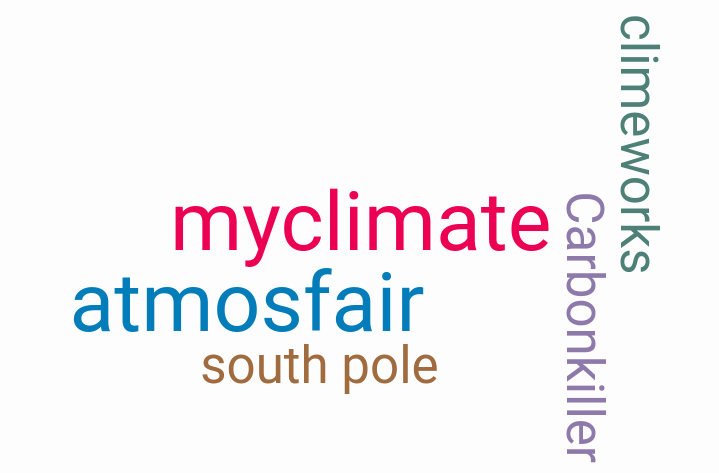

Finally, we collected companies that offer compensation options and discussed some of their advantages and prices:

What options for compensation (that you would recommend) do you know?

Thanks to all participants for an interesting and engaged discussion, and in particular to our guest Cyril Brunner!

Short summary: Prof. Götz Bokelmann and I took advantage of the lecture-free holidays and made a one-day trip to Falkenstein in Lower Austria (station A001A) and Gbely in Slovakia (station A333A).

pic1: map with the two destinations

Trip diary from 29.12.2022

Forecast for the day: sunny to partly cloudy, temperatures up to 4°C. Actual conditions: very changeable in the morning (from cloudy to foggy and sunny), but mainly sunny in the afternoon, with temperatures above 4°C.

Falkenstein A001A

The journey to Falkenstein was a bit difficult. We took the A22 northbound and left at the Sierndorf exit. The weather kept changing, and at times there was very dense fog. On arrival in Falkenstein, however, there was sun to the south and heavy cloud to the north. The station is hidden in a WW2 firing position up in the hills, in the vineyards near the castle. We visited because the power kept failing temporarily. There is no mains power supply, so all the power comes from two solar panels at different locations, feeding two 100 Ah batteries in the station itself. Reaching one of the panels was not easy, as it is hidden deep in the bushes, but both panels looked intact, as did the wiring. A voltage test in the station confirmed this assessment. So the panels were fine, and we only had to replace the two heavy lead batteries.

After completing all routine tasks, we packed our things and left for Slovakia.

pic2: stock photo Ruine Falkenstein

pic3: firing slot

pic4: station instruments in the building

pic5: vineyard view from near the station entrance A001A

Gbely A333A

In Hohenau, near the border, we took a short break to refuel, buy lunch and call Petr Kolinsky. He managed to reach the Slovak station owner, who came to open the station for us. It is located in an old distillery. The main task there was to replace the modem and test it, because it is the first AVM modem in Slovakia (SK). Soon after the break we arrived, and the owner was already waiting for us. The station sits inside an old garage in the building, where a lot of other things are stored as well, so the difficulty is getting past all of it to do the maintenance. We installed the new modem and a multi-socket power connector, and changed the disks. The sensor hardly needed any levelling, only calibration and testing. The good news was that the test with the AVM modem worked in SK without issues, so we can start deploying this device at all Slovak stations in the near future.

pic6: Prof. Bokelmann inspecting the station A333A

pic7: road to Gbely

...stay tuned!

I was able to attend the second week of the 27th UN Climate Conference, the Conference of the Parties (COP27) in Sharm El Sheikh, Egypt, as a youth representative of the European Forum Alpbach. As a climate scientist who firmly believes in acting on the best available science, I found my experience there somewhat sobering. What I observed at the negotiations, and what various delegates and observers told me, was that decisions are based mainly on political considerations rather than scientific facts. Yet the increasing inclusion of the young generation in particular might change this dynamic.

You can read more reflections in a blog post for the University of Vienna or in a text I wrote together with the three other scholarship holders on the European Forum Alpbach FAN voices blog.

Main entrance to the blue zone of COP.

Chat with Thomas Zehetner (climate spokesperson WWF Austria).

Intervention in the party plenary by a youth representative from Ghana.

Silent youth protest (shouting was not allowed) in front of the main negotiation hall.

Quo vadis climate?

Conference from - @ Grundlsee

https://www.ashpc.at/ - Indico (username: ashpc22-user, password: conferenceuser) - Abstracts - PDF

Attendees

Update from VSC

- Next-generation Vienna Scientific Cluster (VSC5) will be fully available in summer 2022; 20 GPU nodes are still missing.

  - 710 CPU nodes

    - 570 nodes (512 GB)

    - 120 nodes (1 TB)

    - 20 nodes (2 TB)

  - 60 GPU nodes

    - 512 GB

    - 2x NVIDIA A100

  - AMD EPYC 7713 (4 NUMA, cores: 64, threads: 128, base clock: 2.0 GHz, L3 cache: 256 MB)

- Intel compilers are still a good option for Fortran.

- Currently #301 in the Top500 list (95,232 cores, 3.05 PFlop/s, 516 kW; for comparison, VSC4: #82 (2019))

- We requested more storage for IMGW: 100 TB in DATA.

  - more storage will incur fees

- New JupyterHub + NoMachine (GUI, 4 workstations)

- Support is provided for rewriting/optimizing applications

- Upcoming future plan: connect the JET storage system to VSC
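The figures above can be cross-checked with some back-of-the-envelope arithmetic: the three CPU-node classes should add up to the 710 CPU nodes, and the Top500 numbers give the energy efficiency. This sketch counts 1 TB as 1024 GB (an assumption) and covers only the CPU nodes.

```python
# Memory per CPU node (GB) -> number of nodes, from the VSC5 list above.
CPU_NODES = {512: 570, 1024: 120, 2048: 20}

total_nodes = sum(CPU_NODES.values())
total_memory_tb = sum(mem_gb * n for mem_gb, n in CPU_NODES.items()) / 1024  # 1 TB = 1024 GB

# Energy efficiency from the Top500 entry: 3.05 PFlop/s at 516 kW.
gflops_per_watt = 3.05e6 / 516e3  # 3.05 PFlop/s = 3.05e6 GFlop/s, 516 kW = 516e3 W

print(total_nodes)                # 710
print(total_memory_tb)            # 445.0
print(round(gflops_per_watt, 2))  # 5.91
```

So the CPU nodes together hold roughly 445 TB of memory, and the system delivers about 5.9 GFlop/s per watt in the benchmark.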

EuroHPC

talk by Vangelis Floros from EuroHPC JU

EuroHPC JU: a legal and funding entity created in 2018, with headquarters in Luxembourg. It bundles HPC in Europe and follows on from PRACE.

For more information see: PDF

NCC - Austria

talk by Sarah Stryeck

A National Competence Center for High-Performance Computing, High-Performance Data Analytics and Artificial Intelligence.

Want to use EuroHPC resources? They advise and help with proposals!

Contact: info@eurocc-austria.at / https://eurocc-austria.at/

Note: Support for software development can be provided directly by the EuroHPC centers (some showcases).

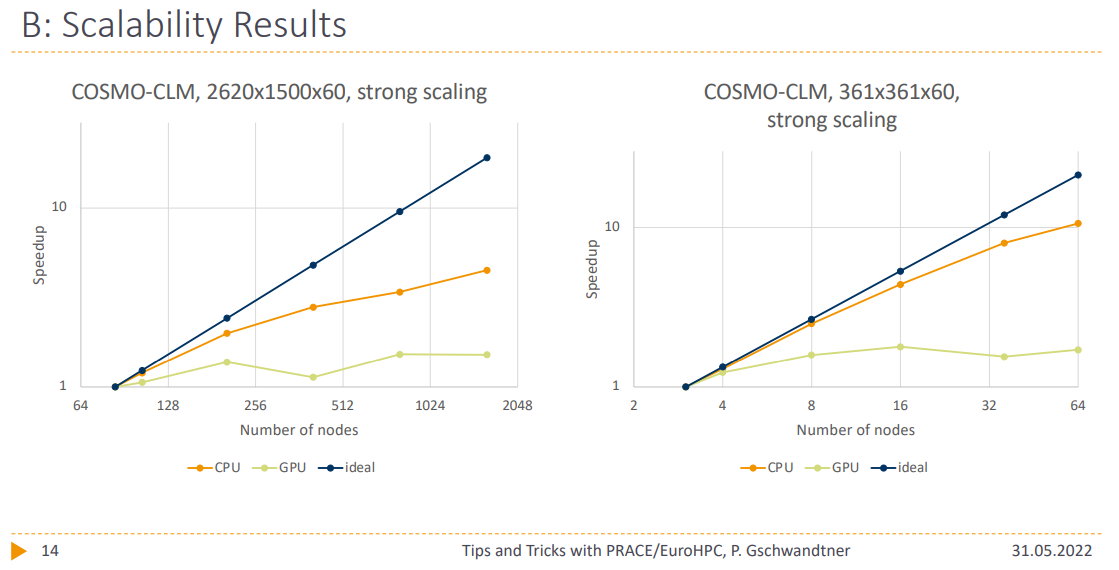

Tips and tricks from the user perspective with PRACE/EuroHPC applications

talk by Philipp Gschwandtner from University of Innsbruck

Motivation for proposals:

- PRACE/EuroHPC proposals are a considerable effort, but have a high pay-off

- Not as difficult as one might think → PRACE acceptance rates are quite high

- Ingredients for a successful PRACE/EuroHPC access proposal:

  - Scientific problem + solution

  - Fast and scalable implementation

  - A pinch of luck

  - Let it simmer for a few weeks

  - Enjoy!

- We can ask him for support/advice too

Contact: philipp.gschwandtner@uibk.ac.at

One awarded project was kmMountains: a structured grid simulation of climate change in mountainous landscapes (Alps, Himalaya)

For more information see: PDF (Talk by Emily Collier from 2021, PDF)

LEONARDO: current status and what it is to us

talk by Alessandro Marani from CINECA

Specifications & Current Status:

Further steps: hardware installation in 07/2022; configuration and testing to be completed by 12/2022

Total investment: 240 Mio. €, EU-Contribution: 120 Mio. €

Note: same building as Atos BullSequana (HPC-System of ECMWF)

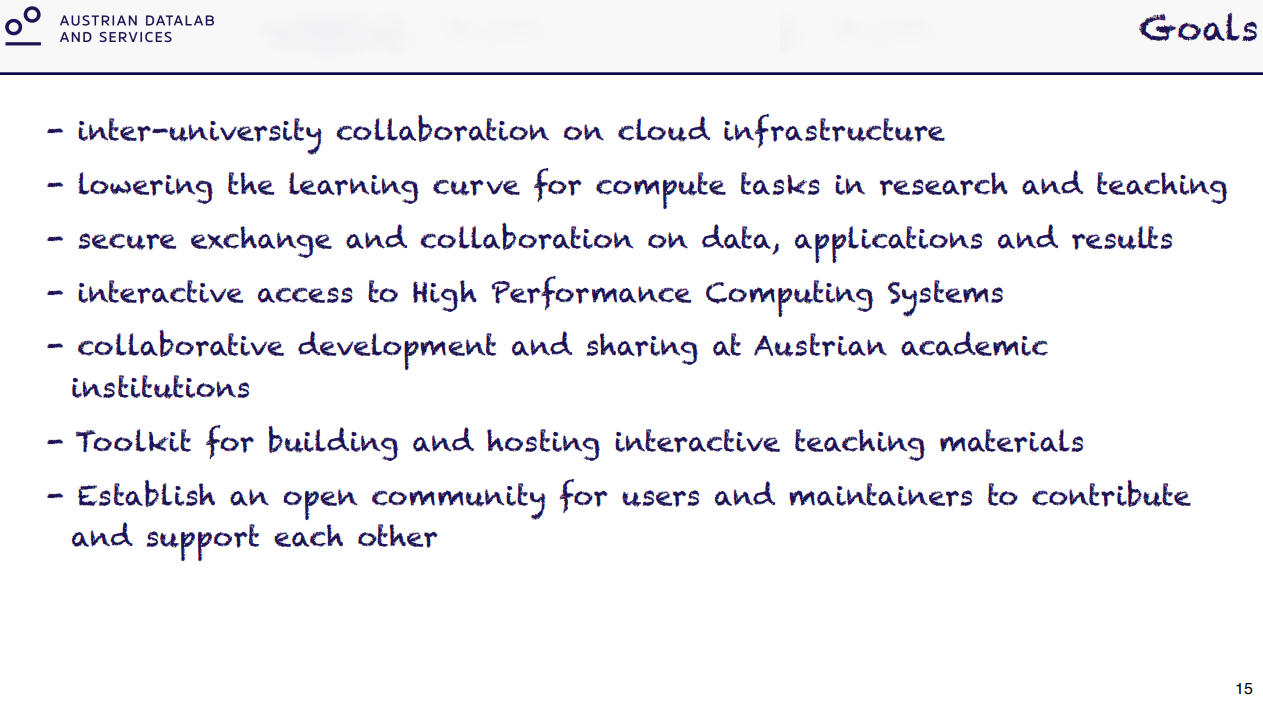

Austrian DataLAB and Services

talk by Peter Kandolf from University of Innsbruck/TU Vienna

DataLab of all Austrian universities; the areas involved are research, teaching and the ZIDs; funded by the BMBWF and all participating universities (2020-2024)

Further information: ADLS (austrianopensciencecloud.org)

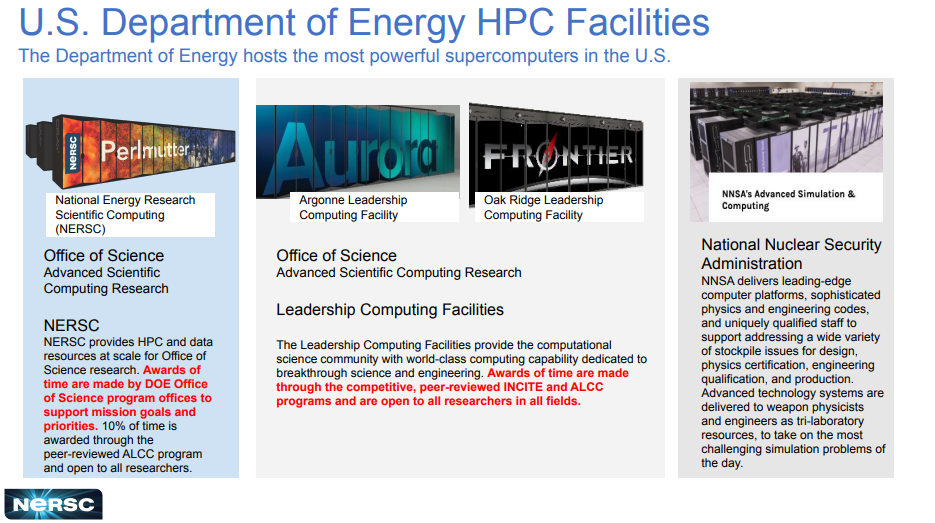

Perlmutter and HPC in the U.S. Department of Energy Office of Science

talk by Richard Gerber from NERSC

Note: massive support for software development on GPUs (hackathons, companies, scientists, ...)

Total investment: 600 Mio. $ (just Perlmutter)

For more information see: PDF

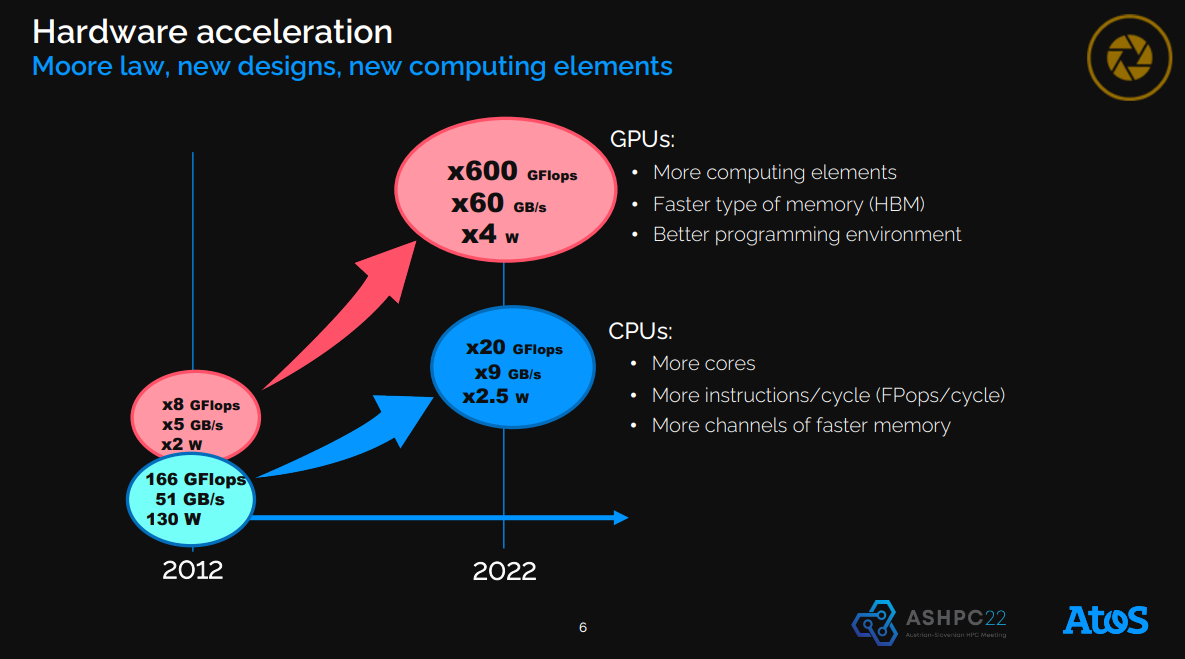

HPC in the Exascale era and beyond

talk by Jean-Pierre Panziera from ATOS

Comparison of GPUs/CPUs & Usage of AI for NWP (ECMWF)

For more information see: PDF, About ECMWF's new data center in Bologna: ECMWF Newsletter 22

Next ASHPC

The next ASHPC conference is expected to take place in June 2023 in Maribor, Slovenia.

Please save the date for ASHPC23: June 13-15, 2023!